Just a quick note to those in NZ in the business of creating or designing websites: Webstock is on again from Feb 11-15 in Wellington. See the site for a full list of speakers.

I haven't been before, but I believe it's a must-go for those in the industry. Also, my mate and former boss, Hamish Fraser from Verb, is a co-sponsor, so I'm keen for it to go well for them.

I will get him to do a post-conference write-up after the event.

Discussions relating to Software Development. Other rants thrown in for free

Thursday, January 31, 2008

Tuesday, January 22, 2008

1st .NET NZ London meet up wrap

We held our inaugural meet up on Saturday. Daniel Robinson kindly offered his place as a venue, and even more kindly supplied drinks and nibbles. Present were Daniel, Nic and myself. Andrew Revell was in Iceland so couldn't come.

It was nice to finally put faces to names, especially Nic, whom I have known (virtually) since the late '90s from the Delphi User Group.

Topics of conversation varied from non-technical observations of the UK so far to LINQ and WPF on the technical side. We were all in agreement about how backward a lot of systems are in the UK, and how getting things set up can be a nightmare. Oh, and how quickly the cash we brought from NZ to live on gets burned!

We are aiming to do this at least once a month, and hoping numbers will grow as people come over, or as other Kiwis in the UK find out. We should have at least two new faces next time, as Nick and Shekhar are landing in the next few weeks.

If you are an antipodean in the UK of a technical bent, feel free to get in touch by leaving a comment and I will add you to the contact list. Likewise, if you are heading over here from NZ in the coming weeks and months, get in touch too. Advice freely given!

Wednesday, January 16, 2008

Keynote prediction results

Here are the predictions I made with the results:

1) iPhone 2 or some sort of new iPhone.

Wrong. Just a software update.

2) "Touch" SDK

Yes, but had already been mentioned.

3) Sub-Notebook Mac

Yes, but it was fairly common knowledge.

4) iTunes video rentals

I said:

This looks a certainty, although probably in the US only for a start, so not too worried about this announcement. If this is true, then an upgraded Apple TV might be needed to push videos from iTunes to the TV?

Got it in one! I'll pat myself on the back for that one: no one else that I saw was predicting a refresh to the Apple TV.

5) Desktop between Mac Pro and iMac

No, sadly.

6) Refresh to Apple Cinema Displays

Again, no.

My out of left field item: Newton-like device

No, dammit.

So 3/6 for 50%. Not too bad I guess for my first try.

Highlights from the Keynote

The keynote is over and the highlights are iTunes rental and the updated Apple TV and of course the MacBook Air. Hate the name, love the computer. I can't wait to see one in person, but from the videos it's tiny.

Go to the Apple Website for all the video action.

They have just pushed out an update to iTunes, Front Row and QuickTime. Along with these updates, they have fixed the Dock and the menu, on the quiet too I might add. Well, it appears that way to my eyes anyway. The pic below doesn't do it justice, but the reflection is different. I can't put my finger on what exactly, but it's definitely been changed.

Tuesday, January 15, 2008

Why didn't I get the press release?

Oh man I want one of these.

It's a shame I only just found it now. It would have been a great Xmas present. It measures 5.5cm in length, so it's tiny. They also do one with scissors (S4), but the knife would do everything the scissors would.

I actually came across it indirectly via Jeff Atwood's latest post about what is on his keychain this year.

For the record, my keychain is boring.

It contains:

- Keys

- Liverpool FC Badge

- The Stig

- And soon to be a Leatherman Squirt P4

Monday, January 14, 2008

Keynote Predictions:

Steve Jobs will kick off 2008 at the MacWorld Expo tomorrow, giving the keynote to the conference, as he does every year.

It's Apple fans' favourite time of the year, with Jobs announcing new products, or updates to old favourites. Last year, for example, the iPhone was announced to great fanfare.

The other favourite pastime for Apple pundits this time of year is predicting what will be announced. I have heard everything from a sub-notebook through to a docking station that includes a monitor, looking a little like the iMac.

Having read and studied the rumour sites and analysts' predictions, here is a list of the things I think we will see announced (in no particular order):

1) iPhone 2 or some sort of new iPhone.

Whether this be a 3G iPhone, or a rumoured iPhone Nano, there will definitely be an iPhone announcement.

2) "Touch" SDK

This has already been announced, but I think the iPhone SDK will also include the iPod Touch and other future touch-enabled Apple devices or computers. I also predict that all software will be released through iTunes, and will have to be certified by Apple.

3) Sub-Notebook Mac

There are various rumours about the form factor, and whether this would include a touch screen, but it looks certain some form of sub-notebook will be announced. Rumour has it that it will be called the MacBook Air. It would be great if this machine had touch, but I can't see it happening. It would also be great if it had the docking machine I blogged about, but it probably won't.

4) iTunes video rentals

This looks a certainty, although probably in the US only for a start, so not too worried about this announcement. If this is true, then an upgraded Apple TV might be needed to push videos from iTunes to the TV?

5) Desktop between Mac Pro and iMac

This would be great, a machine with iMac like specs, but not with built in monitor. Should be cheaper than an iMac, or if dearer then not much. So the line up would be MacMini, MacTower(? my name), iMac, MacPro.

A price around US$800-900 would be great. Some analysts predict it will come in between the iMac and Mac Pro. I think it needs to come in between the Mini and the iMac to be a real consumer item. Then again, Apple have never been too concerned about being expensive.

6) Refresh to Apple Cinema Displays

It's been a while since the Cinema Displays have been refreshed, so I expect a new range to be announced. Maybe with greater integration with Apple TV built in?

My out of Left Field item: Newton like device

Ok, so this would be me dreaming, but a device bigger than an iPhone but smaller than a tablet, with an 800x600 screen, Wifi and 3G, would be amazing. With the iPhone interface, it would be the ultimate geek tool. Enough grunt to do most computer tasks (with a Bluetooth keyboard), and some sort of dock which allowed it to plug in to a monitor when back at the office or home. With an iPod built in and an almost-HD screen, it would be great for video too. Not going to happen, but if it did you would hear 1 million geeks cry out in ejaculatory unison.

Friday, January 11, 2008

Buggar

RIP Sir Edmund Hillary.

It's hard to express how I feel at this moment. Obviously sad, but more than that. When a nation loses an identity, an icon, a bloody legend, how do you express that? The fact that the news of his passing has struck a chord worldwide is a testament to the man.

It's hard for anyone who isn't a Kiwi to understand the impact this will have on our country. Sir Ed was the epitome of a New Zealander: adventurous, brave yet humble and self-effacing, and through all his success and problems, he remained down to earth and a nice bloke. He got out and did what was believed couldn't be done, with the pioneering, number 8 wire mentality we believe is in all Kiwis. I'm not just talking Everest, but his expeditions to the Antarctic and his work in Nepal.

In the current media climate, where words like great and legend are bandied around so often they lose their meaning, this man truly deserved them. Helen Clark called him "the greatest person to ever be a New Zealander". It's pretty hard to disagree with that.

It's a sad day, but remembering Sir Ed and his achievements makes you bloody proud to be a New Zealander. As a Kiwi living in the UK, I don't really need telling, but it's times like this that remind you that you come from the best country in the world.

I thought I'd finish this post with a quote from Sir Ed that says it all really. On announcing he had reached the top of Everest: "We knocked the bastard off".

Cyclomatic complexity

In his last post, Shaun talked about the new Code Metrics feature in Visual Studio 2008, in particular Cyclomatic Complexity (CC).

Many of you out there might not have come across the term before, so I thought I'd give a Reader's Digest definition.

So what is CC? In a nutshell, it's a measure of the complexity of some code. It is obtained by counting the linearly independent paths through the measured code. What the hell does that mean?

Basically it's the amount of branching your code does, with control statements like for or if.

Example

The above code has two branching statements. For branch coverage, two test cases are sufficient; for path coverage, four test cases are needed. The cyclomatic complexity of this code is 3.

This is calculated (there are a few ways to do it) with the following formula:

M = Number of Closed Loops + 1 (where M = CC)
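As a minimal sketch of code that fits this description (the class, method and parameter names are hypothetical, not taken from the original example), two independent if/else decisions in sequence give two decision points, so M = 2 + 1 = 3, while the number of distinct paths is 2 x 2 = 4:

```csharp
using System;

public static class CyclomaticExample
{
    // Two sequential, independent decisions: CC = 2 decision points + 1 = 3.
    public static string Describe(bool isMember, bool hasCoupon)
    {
        string result;

        // Decision 1
        if (isMember)
            result = "member";
        else
            result = "guest";

        // Decision 2
        if (hasCoupon)
            result += "+coupon";
        else
            result += "+full-price";

        return result;
    }

    public static void Main()
    {
        // Branch coverage: these two calls take every branch at least once.
        Console.WriteLine(Describe(true, true));
        Console.WriteLine(Describe(false, false));

        // Path coverage: the remaining two combinations are needed as well.
        Console.WriteLine(Describe(true, false));
        Console.WriteLine(Describe(false, true));
    }
}
```

The first two calls are enough for branch coverage; all four are needed to walk every path.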

So to cut the post short, the higher the CC number, the more rigorous the unit testing needs to be.

Code Metrics in Visual Studio 2008

If there's one thing in a development project with which I have an almost unhealthy obsession, it's statistics. I'm rarely happier than when I'm knee-deep in profiler output or digging through build statistics trying to work out where in the code coverage levels the unit testing needs beefing up. So when I discovered that the Team Developer versions of Visual Studio 2008 include some new Code Metrics functionality, I must admit it got me quite excited.

The metrics are available under the "Analyze" menu and allow you to scan selected projects or the whole solution. The results look like this:

The five indicators that are calculated for each project are:

Maintainability Index

This is basically a summary value which uses the results of the other calculations to produce a value from 0-100. The higher the value, the better the code is in terms of maintainability.

Cyclomatic Complexity

This is an interesting measure which is effectively the number of code paths through all methods in the project. The idea here is that the higher the number of decision points in the code the greater the level of code coverage required in the form of unit tests.

Depth of Inheritance

At the top level, the depth of inheritance is the maximum number of levels of inheritance for all types in the project. A high (and therefore deep) inheritance hierarchy can probably point to possible over-engineering of the class structure. It can also hinder maintenance as the more layers of inheritance, the more difficult it can be for someone to understand exactly where code for particular members lives.

Class Coupling

This metric is an indicator of the level of coupling between classes in the module. This includes dependencies through properties, parameters, template instantiations, method return types etc. Obviously the current mantra with development, particularly in these times of TDD, is that code should have high levels of cohesion and low levels of coupling; this makes unit testing individual classes much easier to achieve.

Lines of Code

Finally, the lines of code count. I'm not entirely sure but I suspect that this is some approximation based on the IL generated for each method rather than a straight count of the physical lines of code in the source files. Obviously this is useful when drilling down through the namespaces and types to be able to see where there are methods that are possibly doing too much and could be good candidates for refactoring.

The metric I'm most interested in at the moment, being a TDD acolyte, is the Cyclomatic Complexity (CC) value. I'm a big fan of using code coverage analysis tools such as NCover as part of a continuous integration environment to keep an eye on the level of coverage that the current set of unit tests are providing and being able to target those parts of the project where additional testing is required. CC is another tool to help with that as by drilling down into projects and namespaces with high CC we can get down to the specific methods that have a high value and target those with extra unit tests.

So how exactly is Cyclomatic Complexity calculated? As a simple example have a look at the following code:

    public int CountItemsWithStatus(IList<Item> items, StatusCode status)
    {
        if (items == null)
        {
            throw new ArgumentNullException("items");
        }

        int count = 0;
        foreach (Item item in items)
        {
            if (item.Status == status)
            {
                count++;
            }
        }

        return count;
    }

Obviously this is pretty standard stuff, but we're looking to count the number of possible paths through the method, so here we go:

1) If the "items" parameter is null, the code will throw an exception.

2) The list is empty. The code will skip the foreach and return 0.

3) The list is non-empty but none of the items have the required status, so the method returns 0.

4) The list is non-empty and one or more items have the required status, so the count++ is executed and the method returns the count.

So by my reckoning, we have a Cyclomatic Complexity here of 4, in a method which contains approximately 7 lines (so a high ratio.) And in theory, we would need our unit tests to cover these four scenarios in order to completely cover all code in the method.
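As a rough sketch of what covering those four scenarios might look like, the following uses plain method calls rather than any particular unit testing framework. The Item and StatusCode definitions, the ItemCounter and FourScenarios class names and the Open/Closed status values are hypothetical stand-ins so that the example compiles on its own:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for the types used by the method above.
public enum StatusCode { Open, Closed }

public class Item
{
    public StatusCode Status { get; set; }
}

public static class ItemCounter
{
    // Same logic as the method above, made static to keep the sketch self-contained.
    public static int CountItemsWithStatus(IList<Item> items, StatusCode status)
    {
        if (items == null)
        {
            throw new ArgumentNullException("items");
        }

        int count = 0;
        foreach (Item item in items)
        {
            if (item.Status == status)
            {
                count++;
            }
        }
        return count;
    }
}

public static class FourScenarios
{
    // Scenario 1: a null list throws ArgumentNullException.
    public static bool NullListThrows()
    {
        try
        {
            ItemCounter.CountItemsWithStatus(null, StatusCode.Open);
            return false;
        }
        catch (ArgumentNullException)
        {
            return true;
        }
    }

    // Scenario 2: an empty list skips the foreach and returns 0.
    public static int EmptyList()
    {
        return ItemCounter.CountItemsWithStatus(new List<Item>(), StatusCode.Open);
    }

    // Scenario 3: a non-empty list with no matching items returns 0.
    public static int NoMatches()
    {
        var items = new List<Item> { new Item { Status = StatusCode.Closed } };
        return ItemCounter.CountItemsWithStatus(items, StatusCode.Open);
    }

    // Scenario 4: a non-empty list with matches returns the number of matches.
    public static int SomeMatches()
    {
        var items = new List<Item>
        {
            new Item { Status = StatusCode.Open },
            new Item { Status = StatusCode.Closed },
            new Item { Status = StatusCode.Open }
        };
        return ItemCounter.CountItemsWithStatus(items, StatusCode.Open);
    }

    public static void Main()
    {
        Console.WriteLine(NullListThrows()); // True
        Console.WriteLine(EmptyList());      // 0
        Console.WriteLine(NoMatches());      // 0
        Console.WriteLine(SomeMatches());    // 2
    }
}
```

In a real project each scenario would more likely be a separate test method in whichever test framework the team already uses.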

One of the nice things about this method of analysis is that by using the decision points (foreach, if, for, while etc) as a basis for complexity we could use tools to automatically generate a lot of the unit tests for us.

Thursday, January 10, 2008

Working with LINQ to SQL and WCF Services (Part 1)

As James has already posted here there are concerns circulating around the web about the lack of any coherent guidance in terms of a good approach to using LINQ to SQL (or Entities for that matter) in an n-tier architecture. One of the issues I've been fretting over is what I refer to as the "types and references" issue, namely that with the approaches described in the MSDN articles covering the topic there's no real explanation as to how the entity types generated by the IDE from the dbml file can be consumed as deserialised entities on the client side without creating some kind of code "disconnect" between LINQ entities which are generated automatically from the DB schema and a different set of definitions which can be used by the client.

There are various possible solutions to this, many of which look promising at the start but ultimately end up being dead ends or not really practical. One approach that I have been able to get to work is outlined below. What we're going to do in this first part is simply create a WCF Service component which will return a list of Customers from the Northwind database and consume that component in a simple client.

The Data Component

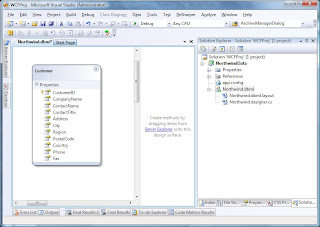

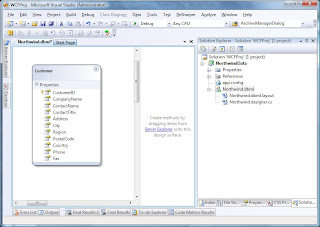

1. To start, we fire up Visual Studio 2008 and create an empty Solution. To that we want to add a Class Library project which we'll call NorthwindData.

2. Next, we add a dbml file to the project by going to "Add New" and selecting the "LINQ to SQL Classes" entry and call it Northwind.dbml. Then use Server Explorer to navigate or connect to your SQL Server and drag the Customers table onto the dbml designer surface from the Northwind database.

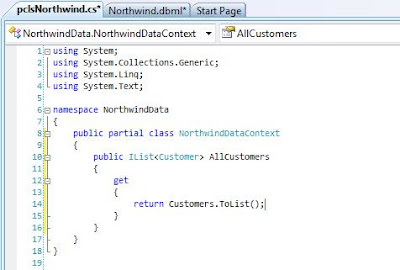

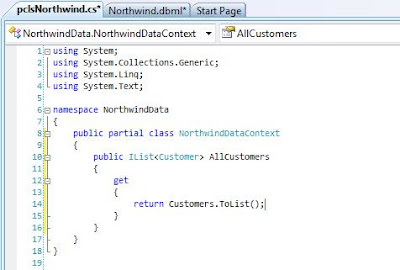

3. We now want to add a partial class so that we can add our own code to the generated DataContext. So add a new Class file and add the following code which will provide a method which returns all customers.

The WCF Service

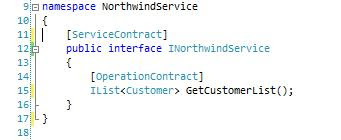

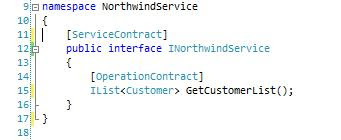

4. So far so good. But now it's time to create our WCF Service component. So add a New Project to the solution and select WCF Service Library, we'll call it NorthwindService. We now need to make a few changes to the service framework that VS has created for us. First we need to add references to the NorthwindData project and to System.Data.Linq. Then we need to change the default Interface and Service names etc so that they are a bit more meaningful for our purposes. To do this we first rename the IService1.cs file to INorthwindService.cs (when you are asked to rename all references say Yes otherwise you will have to change the name of the actual interface yourself too.)

In WCF, service interfaces are defined as "Service Contracts" and are marked with the ServiceContractAttribute; the methods on those interfaces are marked with the OperationContractAttribute. What this basically does is specify that the interfaces and marked operations define a contract that is upheld by any service which advertises itself as implementing that interface. If you've done much work with web services then you'll be quite at home with this concept, as what it is effectively doing is decorating the interface so that our service can advertise and describe itself in a way analogous to the WSDL approach. At the moment we're only interested in returning one list of Customers, so our contract is relatively simple. The interface should be defined as follows:

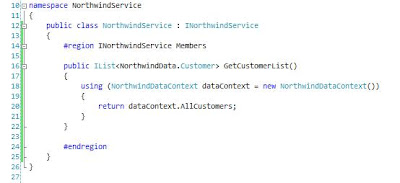

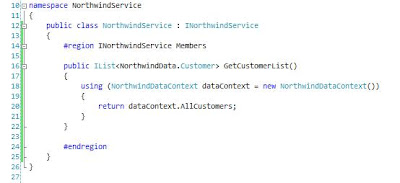
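As a hedged sketch of what such a contract can look like in code: the interface name INorthwindService and the GetCustomerList operation come from this walkthrough, while the trimmed-down Customer class and the ContractInspector helper are hypothetical stand-ins so the example compiles on its own (in the real solution, Customer comes from the NorthwindData project). It assumes a reference to System.ServiceModel.

```csharp
using System;
using System.Collections.Generic;
using System.ServiceModel;

// Hypothetical stand-in for the Customer entity generated from Northwind.dbml.
public class Customer
{
    public string CustomerID { get; set; }
    public string CompanyName { get; set; }
}

// ServiceContract marks the interface as a WCF service contract.
[ServiceContract]
public interface INorthwindService
{
    // Only methods marked with OperationContract are exposed as service operations.
    [OperationContract]
    List<Customer> GetCustomerList();
}

// Hypothetical helper, included only so the attributes can be seen at runtime.
public static class ContractInspector
{
    // Lists the methods on a contract interface that carry OperationContract.
    public static List<string> GetOperationNames(Type contract)
    {
        var names = new List<string>();
        foreach (var method in contract.GetMethods())
        {
            if (Attribute.IsDefined(method, typeof(OperationContractAttribute)))
            {
                names.Add(method.Name);
            }
        }
        return names;
    }

    public static void Main()
    {
        bool isContract = Attribute.IsDefined(
            typeof(INorthwindService), typeof(ServiceContractAttribute));
        Console.WriteLine(isContract);
        Console.WriteLine(string.Join(", ",
            GetOperationNames(typeof(INorthwindService)).ToArray()));
    }
}
```

The reflection helper is not part of the service itself; it just shows that the contract is carried entirely by the attributes on the interface.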

5. The next simple step is to provide an implementation of the interface in our component, so we rename Service1.cs to NorthwindService.cs (again allow VS to rename all references etc) and change the implementation code to the following:

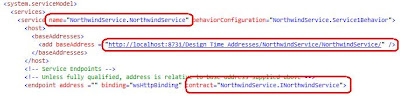

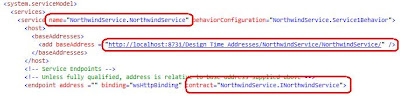

6. Finally to make our service useable, we need to update the config file so that the changes we have made to the names of the interfaces etc are registered correctly when the WCF Service is hosted. Open the App.Config file and change the contents to match the following:

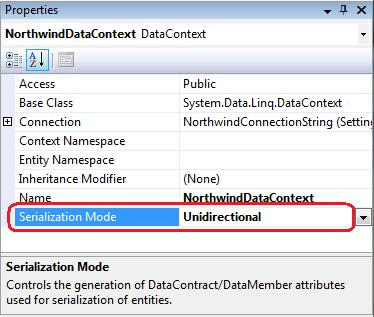

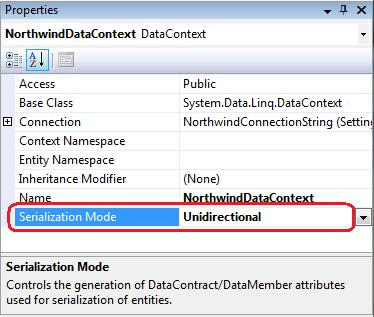

7. At this point, we only have one more tiny problem with our service. In point 4 we learned that interfaces exposed by WCF services specify a "contract", and this also extends to any data objects which are passed through that interface. This is done using the DataContractAttribute (and the associated attributes which define the members on the entity class). So how do we get the entity objects generated by the IDE to be decorated with the required attributes? This took a considerable amount of digging but in the end turned out to be a remarkably simple task. If we go back to our NorthwindData project and go to the properties of the dbml in the designer, we can see a property called Serialization Mode. All we have to do is change the setting to "Unidirectional".

What this then does is automatically mark any of the entities generated from the dbml with the DataContract attributes.

It also means that the data definition of the entities can be included in the service definition of the interface contract. In a practical sense what this does is allow us to add a reference to the service and Visual Studio 2008 (utilising svcutil.exe under the hood) will read the metadata for the service and generate a client-side service proxy containing definitions of the LINQ entities for us.

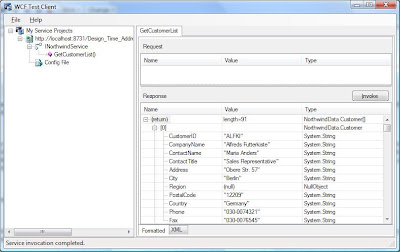

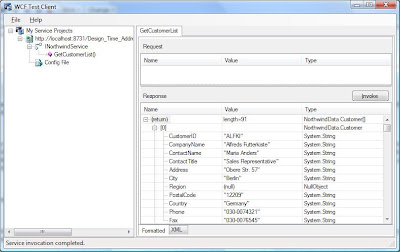
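To give a feel for what that decoration amounts to, here is a simplified, hand-written equivalent of a data contract for a customer entity. It is a sketch, not the designer's actual output: the generated code is considerably more verbose and includes the LINQ to SQL mapping attributes as well, and only two of the Northwind customer columns are shown.

```csharp
using System.Runtime.Serialization;

// Simplified illustration of a class carrying a data contract. With
// Serialization Mode set to "Unidirectional" the designer adds attributes
// like these to the generated entity for you.
[DataContract]
public class Customer
{
    // Each serialised member is marked individually; Order fixes its
    // position in the serialised message.
    [DataMember(Order = 1)]
    public string CustomerID { get; set; }

    [DataMember(Order = 2)]
    public string CompanyName { get; set; }
}
```

Compiling this needs a reference to System.Runtime.Serialization.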

8. Let's test our service. Right-click on the NorthwindService project and select "Debug->Start New Instance..." This should force a compile and run the WCF Service which will be hosted by default in the "WCF Service Host" application which will place an icon in the notification area. If the service has been configured correctly the WCF Test Client should launch and after a second or so your service should be loaded into the tree view on the left. You can then expand the tree nodes to see the structure of the Service Interface and double click on the "GetCustomerList()" node. This will open a tab on the right which has an "Invoke" button which, when clicked, will call the method on the service and display the results in the lower box. As you can see below, the call returned 91 customer entries.

The Simple Client

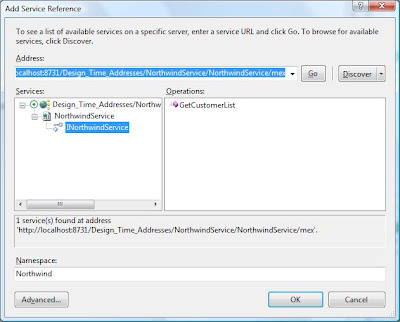

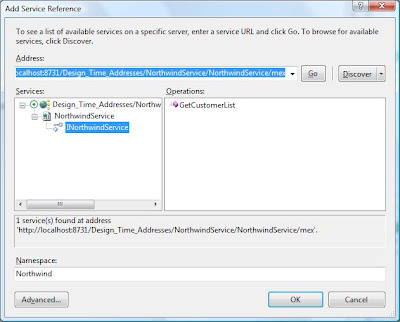

8. To create our simple client we'll add a console application project to our solution. This will serve our purposes in Part 1 as we're just interested in getting a list of customers back from the database but in future parts I hope to create a more complex and useable client using WPF. Add a console project called SimpleClient then right click on the References node in Solution Explorer and select "Add Service Reference..." If everything has gone right, you should be able to click the "Discover" button and the service details should appear in the tree on the left. When you expand the node, the service is instantiated and you can navigate to the interface definition. Before we commit the reference, change the Namespace entry at the bottom to Northwind. Finally, by default collections appear in the proxy as arrays, to make them a List as defined in the interface we need to click on the "Advanced..." button and change the "Collection Type:" dropdown to "System.Collections.Generic.List".

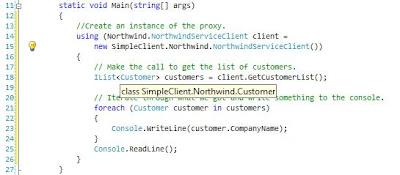

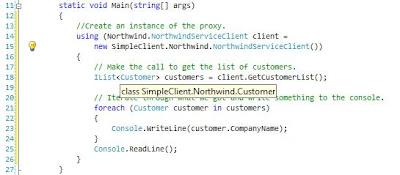

9 Click OK in the dialog, and the service reference will be added to the project. We can then add the following code to the Main method in Program.cs:

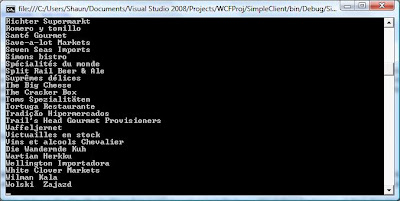

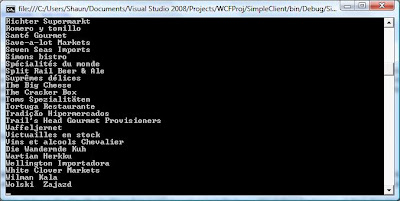
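As a hedged sketch of the sort of client code this step describes: the snippet below relies on the proxy that "Add Service Reference" generates, so it only compiles once that reference exists in the SimpleClient project. The proxy class name NorthwindServiceClient is an assumption that follows from a contract called INorthwindService; CustomerID and CompanyName are columns of the Northwind Customers table.

```csharp
using System;
using System.Collections.Generic;

namespace SimpleClient
{
    class Program
    {
        static void Main(string[] args)
        {
            // Generated proxy class (name assumed), living in the "Northwind"
            // namespace chosen in the Add Service Reference dialog.
            var client = new Northwind.NorthwindServiceClient();
            try
            {
                // Returns a List because the Collection Type was changed
                // from the default array in the Advanced settings.
                List<Northwind.Customer> customers = client.GetCustomerList();

                foreach (Northwind.Customer customer in customers)
                {
                    Console.WriteLine("{0}: {1}", customer.CustomerID, customer.CompanyName);
                }

                Console.WriteLine("{0} customers returned.", customers.Count);
                client.Close();
            }
            catch (Exception)
            {
                // A faulted channel cannot be closed cleanly, so abort it instead.
                client.Abort();
                throw;
            }

            // Keep the console window open until Enter is pressed.
            Console.ReadLine();
        }
    }
}
```

The Close/Abort split is worth keeping even in a toy client, since calling Close on a faulted channel throws a second exception that hides the original one.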

To test the simple application, ensure an instance of the service is running in the WCF Test Client (if one isn't, right click the service project and select "Debug->Start New Instance"), then start an instance of the console application. All things being well, you should have a console window with a list of Northwind customers displayed.

Summary

So this is where we'll leave it for now. What we've achieved is fairly simplistic but what we have is an overall framework upon which we can build more complex behaviours such as updates and creation.

How good are your .NET coders?

Being a contractor is a balancing act. There are some positives, but just as many negatives. One of the positives is that you see an awful lot of code. Exposure to code you haven't written is a great way to learn. You can pick up some tricks or, just as likely, see an implementation that is really bad and get a chance to think about how you would do it better. Unfortunately, most contractors never get a chance to fix the problems with code. Permies can be threatened by contractors and don't like you messing with their code.

It's understandable. They don't know how good you are or aren't, so why let this new guy loose on their code.

Anyway, this has absolutely nothing to do with the topic of this post. I just wanted to point out that I have seen a lot of codebases over the years. A lot.

The overwhelming conclusion I have found recently is this: If your developers have come from a certain background, say C++ or VB, don't assume they are going to be great C# coders off the bat.

The biggest problem I see is people move away from C++ and VB and instead of understanding and learning C#, they just apply their language X knowledge to C#. What comes out is a hybrid mess of ugliness.

Code that is not quite OO, not quite procedural, not quite anything actually.

So, if you are a development shop looking to move to .NET: take time to actually learn the language. Don't just assume that it's C++ with a garbage collector, or VB with curly braces. It's a bit like assuming that French is just English with an accent, and a vague hint of garlic.

Stop Vista's Update Auto-Reboot, permanently!

It's a story with Vista and XP that has played out over and over again across the lands. So you've been working away at some stuff, some Word docs here, some bits of code there, and some or all of it may or may not be in a position where you want to hit the Save button or do a "Save As", but hey, never mind, you're off out for a few hours...

...while you were out, Windows Update helpfully downloaded and installed a couple of fixes for your machine and now it needs to reboot the machine in order to complete the update. This is all fine but you're not there, and so Vista pops up a dialog giving the user the option to "Restart Now" or "Postpone" and a little countdown timer. When the timer hits 0 your box will restart automatically.

I've lost count of the number of times I've come back from being out to see that login screen staring at me smugly, and then logged in only to notice that it's actually "Logging In" rather than being "Unlocked" (the lack of apps running in the taskbar is a clue).

It makes me SO angry. How many of these updates are SO IMPORTANT that they need to be fully applied to the machine RIGHT NOW??? Are there really hordes of 1337 barbarian hackers out there just waiting to take advantage of some vulnerability in CALC.EXE??? No, it's one of those broad-stroke, treat-all-users-like-they're-idiots policies that MS seem to adopt occasionally with security.

Anyway, a while ago I found a solution, but I keep forgetting what it is, and after doing a Google search yesterday to try to track it down again I found a lot of dangerously incorrect advice on various blogs, the worst of which were people advising that users disable automatic updates altogether. This is wrong! So in the interests of having the info somewhere I know I can find it, and in the hope that it might be useful to others, I'm going to describe what I like to call the "middle ground approach". That is, you still get the prompts from Vista, and you can postpone them for 10 minutes or 4 hours, after which it will nag you again, but it WILL NOT automatically reboot your machine and cause that "blood draining from face" moment you get when you realise that you've lost half a day's unsaved work.

Anyway it's quite simple.

- Go to the Start menu and in the search box type "gpedit.msc", then hit Enter. The Group Policy Object Editor should appear.

- Navigate to "Computer Configuration\Administrative Templates\Windows Components\Windows Update"

- In the list on the right look for the following setting: "No auto-restart for scheduled Automatic Updates installation." Double-click this and change the configured value to "Enabled" then click OK.

It's worth noting two things though:

- If you're on a domain, it's likely that domain policies will override anything you set here even if you are allowed to access the setting in the first place.

- It appears that when the dialog pops up, it gets focus and the "Restart Now" button is highlighted. So if you're typing and you happen to hit "Enter", "Space" or "n" it will be effectively clicking the "Restart Now" button...

Wednesday, January 09, 2008

Vista SP1 - The Windows Update route

As a self-proclaimed "Windows guy" I'm really looking forward to the arrival of Service Pack 1 for Vista. As has already been posted here, some of the most eagerly awaited improvements are in the networking and file transfer stacks. I have quite a few boxes on the WLAN at home and I've taken to transferring large numbers of files using an external disk, which is hardly ideal. MS have published information about the contents of the SP1 release here and by all accounts local network file copies should have the following improvements:

- 25% faster when copying files locally on the same disk on the same machine

- 45% faster when copying files from a remote non-Windows Vista system to a SP1 system

- 50% faster when copying files from a remote SP1 system to a local SP1 system

Other fixes that interest me are:

- Improves power consumption and battery life.

- Improves the speed of adding and extracting files to and from a compressed (zipped) folder.

- Improves the speed of copying files, folders, and other media.

- Improves startup and resume times when using ReadyBoost

An alternative to downloading the full thing, particularly if you're the sort of user who keeps their machine up to date with fixes, is to just let Windows Update (Microsoft Update) apply the Service Pack. Apparently this weighs in at a much nicer and more lightweight 65MB, as it is able to detect which fixes have already been installed and applies only the bits that haven't.

Do you have DADD?

Are you like me? Do you tend to drift off and play around when you are doing something that either doesn't challenge or interest you?

If so, then you have DADD or Developer Attention Deficit Disorder!

I can't claim this as my own; I heard it on the latest Polymorphic Podcast. Well, I sort of modified it a little, but you get the gist.

Tuesday, January 08, 2008

Enjoy what you do?

I have a couple of questions for you.

1) On a Sunday night, are you dreading going to work on Monday?

2) Do you look at the clock every 5 minutes waiting for the time to leave?

3) Are you bored?

If you answered yes to any of those questions above, why?

Software Development should be about passion. You should love what you do, look forward to going in, and when doing it not even realise it’s been 4 hours since you last looked up. Right? Right?

I firmly believe that if you don’t enjoy what you are doing, then you shouldn’t be doing it. It amazes me how people stick in a job they hate just because it is comfortable, or they don’t like change.

If you hate your job, do yourself a favour. Get another one. You wouldn’t spend 40 hours a week bashing your head with a hammer, so why have the same feeling in a sucky job?

I know a lot of people don’t like change. They don’t like having to learn new processes, or meet new people – that awkward few weeks where everybody asks you the same question. I guess deep down, some people are scared that they aren’t as good as they think and could be shown up in a new job?

I’m a contractor and change jobs, on average, every three or four months. If you choose to look at change as a positive, growth experience, and get excited about becoming a better developer, or share your knowledge with others, then it can be a positive thing.

Make 2008 the year you find the passion for development again.

Another year, another resolution

Well, that’s another year buggered. It’s been a pretty big one for us personally. My more regular (or antipodean) readers will know that my wife, daughter and I upped sticks and moved to the UK in March. It’s been a pretty big change, and save for a few moments of recurring homesickness (well, NZ IS the best country in the world) it’s been a pretty good year. I feel I have grown as a developer, have seen some awesome things, and have met some pretty cool people. I expect this year to be just as big from a personal growth point of view.

Except in one area - Weight.

Over the past three or four years I have managed to go from around 85kg to 105kg which is where I was at last weigh in. There are a couple of key reasons for this I think. First and foremost is I’m not exercising as much as I used to. I used to cycle up to 10 hours a week at my peak. I think I would have ridden my bike 30 or 40 times in 2007. Bad.

The other is a combination of laziness, a liking of sweet things and convenience. Oh and the bloody British habit of having a vending machine in every work place!

That was then, this is now.

I think resolutions are rubbish. It’s easy to SAY you will do something, and then trip up. Trust me, I’ve done it, as I’m sure most people have. So, I am not going to make a resolution. I am going to give my word (to myself) that I will do the following this year:

I will stop eating the following:

- Chips (crisps)

- Biscuits

- Anything chocolate related

- Reduce beer intake, if drinking then try Bubbly or Whiskey/Bourbon

- Reduce portion sizes for evening meal

I will get at least 4 forms of exercise per week. Before Xmas I was doing a bit of running so intend to keep that up, and maybe dust off the elliptical trainer.

As my word is my bond I will stick by what I start.

I guess at this point I need a goal. I’m not going to make the mistake of setting a target weight. It’s too easy to get either discouraged or complacent. My initial goal is to commit, truly commit, to the above for one month and take it from there. I think I will use the cold turkey approach, rather than the Weight Watchers approach of rewarding success. So for the next month, goodbye to all rubbish food, and hello running shoes.

I’ll report back in a month. Feel free to join with me on this endeavour. All it needs is a strong will.

Monday, January 07, 2008

Pre Mac World 2008 Rumour #1

A docking station / iMac

If this is true, the line starts here. I love my MBP, but have always wanted more screen real estate when at home. Oh please, Apple, make this a reality.

Design Patterns are for life not just for Christmas

I was a candidate in an interview for a contract position the other day and, as luck would have it, it turned out to be the type of format that I enjoy: the one where the whole thing turns into an hour-long chat about software-related issues rather than some kind of technical test, or one of the interviewers working through a list of questions. This usually occurs when the interviewers are mainly techie types, and I find (having been on the other side of the desk a few times) that it can be particularly effective when you're reasonably happy with the interviewee's credentials but want to find out how they tick, and whether they would fit into the team.

At one point the discussion moved on to Patterns, and specifically Design Patterns. At other times I would probably have started my standard spiel about how the GoF book was required reading at Uni and how patterns were something that I'd found myself evangelising quite early on at places I'd worked.

But on this occasion, I didn't do that. I launched into a bit of a sermon.

The reason is that lately I've become more and more disillusioned with the whole Design Patterns paradigm. When trying to work out why, I thought about the large number of projects I have worked on recently where there has been overenthusiastic promotion of solutions containing frankly preposterous implementations of design patterns, in situations where a simple, cleanly designed solution would have done the job just fine.

Some of the opponents of design patterns claim that their use highlights weaknesses or failures of a particular language to provide certain types of functionality. I don't really go for that. Where I see the problem is that, for relatively inexperienced developers, patterns are a toolbox of huge blunt instruments which they can use to take the lazy route to producing software. Design Patterns take a lot of the thought out of the design process at just the time when the more thought you can put into the design, the better it's going to be.

In my own head, I see patterns as handy chunks of solution which my mind can insert into a design as a kind of box, so if the requirements state that an application needs to have pluggable components then I can automatically pull out this reusable concept of, say, an Abstract Factory from the pattern store rather than having to think about all the nuts and bolts of the specific implementation.

Where it goes wrong is when the requirements do not state that the application needs to support pluggable components but the developer putting together the design starts to think "we could make this pluggable, and so we'll have an Abstract Factory here, and we'll have Builders to construct the objects and this bit would be cool as a singleton, and we'll use Adapters to make them work with this part..."

What happens next is that the weaknesses of the language kick in. With C# for example, none of this comes for free, so we're talking hundreds and hundreds of extra lines of code to implement the pattern functionality which we never needed in the first place. This code has to be tested, reviewed and maintained at an additional cost, and for what? So that the hot-shot developer can show everyone how clever they are, and you can be certain that barely a line of it will live up to the subtitle of the GoF book "elements of reusable OO software."

And so I have to ask, is it about time we started to rethink our approach to design and Design Patterns and accept that they are very powerful abstractions, but come with a cost that needs to be weighed against the benefits of being able to draw a nice box on our design? We've had some fun with them, but with an increasing number of well intentioned but ill-informed PMs starting to evangelise about the benefits of Design Patterns, it's time we started to concentrate on good, solid, clean solutions, rather than working out which toys we can cram in.
